Are you looking for a tutorial that explains, step by step, how to run an SEO audit with Screaming Frog?

In this article, we will explain what Screaming Frog is and everything you need to take into account before using the tool.

Are you ready to learn how to use this SEO Spider?

What is Screaming Frog, and what is it for?

Screaming Frog is an SEO Spider tool (freemium) that lets us analyze a website from top to bottom (our own, a client's, or a competitor's) to check the health status of its URLs and its internal and external links, map the structure of the site, and more.

On top of all this, its main advantage is that it simulates crawling the way Google's bots do. This is very powerful, because it gives us a detailed analysis of what Google sees.

By the way, at the top of this article we have left you a video tutorial on Screaming Frog, in which we explain everything you will find in this article, but in a more dynamic way.

How does Screaming Frog work, and what is it used for?

Knowing how to use Screaming Frog is useful at every level, and even more so when you work on an SEO project professionally. So, in this Screaming Frog course, we will cover the main features you should know if you are not yet familiar with this SEO spider.

But... in what situations will Screaming Frog be useful to us?

At the beginning of a project: A new website lands on our desk, and we don't know what state it is in (regardless of what the client may tell us, to play it safe it is always better to check the site with our own eyes). At this initial point, Screaming Frog plays a fundamental role in the website's SEO audit, because it gives us a broad view of everything we are going to find. To do this, the main features we will look at are the following:

- Web structure (top menu > Visualizations > Crawl Tree Graph).

- Health of the URLs (4xx errors, 3xx redirects, 5xx server errors, pages that do not respond).

- Meta tags: check whether titles are missing or duplicated, and do the same for meta descriptions and headings.

- Canonicals: how many canonical tags are there across all the URLs? Which URLs are missing a canonical?

- URLs: which URLs are duplicated?

Throughout the SEO project: As the project progresses, we will be modifying the site (quick wins, that is, changes that produce fast, visible results in the immediate or short term). As we make these changes, we must make sure the website still works as before and that nothing has broken. To do this, we should focus on the following points:

- 4xx errors

- 3xx redirects

- 5xx server errors

- No response
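To check that nothing has broken between two rounds of changes, one option is to compare two crawls. The following is a minimal Python sketch, not a Screaming Frog feature: it assumes you have turned each crawl into a hypothetical dictionary that maps each URL to its status code (or None when the URL did not respond), for example from two exports, and it lists the URLs that were healthy (2xx) before and are not anymore.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical crawl format: URL -> HTTP status code, or None if the URL did not respond.
Crawl = Dict[str, Optional[int]]


def is_ok(status: Optional[int]) -> bool:
    """True for 2xx responses."""
    return status is not None and 200 <= status < 300


def find_regressions(before: Crawl, after: Crawl) -> List[Tuple[str, Optional[int], Optional[int]]]:
    """Return (url, old_status, new_status) for URLs that were 2xx before but not after."""
    regressions = []
    for url, old_status in sorted(before.items()):
        if not is_ok(old_status):
            continue  # only track pages that were healthy in the earlier crawl
        # A URL missing from the later crawl is reported with new_status None.
        new_status = after.get(url)
        if not is_ok(new_status):
            regressions.append((url, old_status, new_status))
    return regressions


before = {"https://example.com/": 200, "https://example.com/blog": 200}
after = {"https://example.com/": 200, "https://example.com/blog": 404}
print(find_regressions(before, after))  # [('https://example.com/blog', 200, 404)]
```

Note that a URL that disappears from the second crawl and a URL that stops responding both show up with None, so it is worth checking those by hand.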

End of the project: To tell you the truth, when we optimize a website for SEO, there is always something to do and improve, so we would be lying if we told you there is an end point as far as SEO is concerned. Instead, we will apply this point to a migration, to moving a project from pre-production or testing to production (that is, making all the changes visible to the public and letting search engines start indexing them), or simply to any large change that risks breaking part of the website. Whatever situation you are in, these are the points to take into account:

- Health of the URLs (4xx, 3xx, 5xx, no response).

- Review of meta tags (title, meta description, H1, H2...).

- Indexing of the URLs (see the “Indexability” column).

- Hreflang
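One common hreflang problem is a missing return link: page A declares page B as an alternate, but B does not declare A back. The Python sketch below checks for this under an assumed input format (a hypothetical dictionary mapping each page to the {language: URL} pairs found in its hreflang annotations). It compares URLs as exact strings and does not validate the language codes themselves.

```python
from typing import Dict, List, Tuple

# Hypothetical input: page URL -> {language code: alternate URL} from its hreflang tags.
Hreflang = Dict[str, Dict[str, str]]


def missing_return_links(annotations: Hreflang) -> List[Tuple[str, str]]:
    """List (page, alternate) pairs where the alternate does not link back to the page."""
    problems = []
    for page, alternates in sorted(annotations.items()):
        for alt_url in sorted(alternates.values()):
            if alt_url == page:
                continue  # a self-referencing hreflang needs no return link
            back_links = annotations.get(alt_url, {}).values()
            if page not in back_links:
                problems.append((page, alt_url))
    return problems


annotations = {
    "https://example.com/en/": {"en": "https://example.com/en/", "es": "https://example.com/es/"},
    "https://example.com/es/": {"es": "https://example.com/es/"},
}
print(missing_return_links(annotations))
# [('https://example.com/en/', 'https://example.com/es/')]
```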

Parts of Screaming Frog

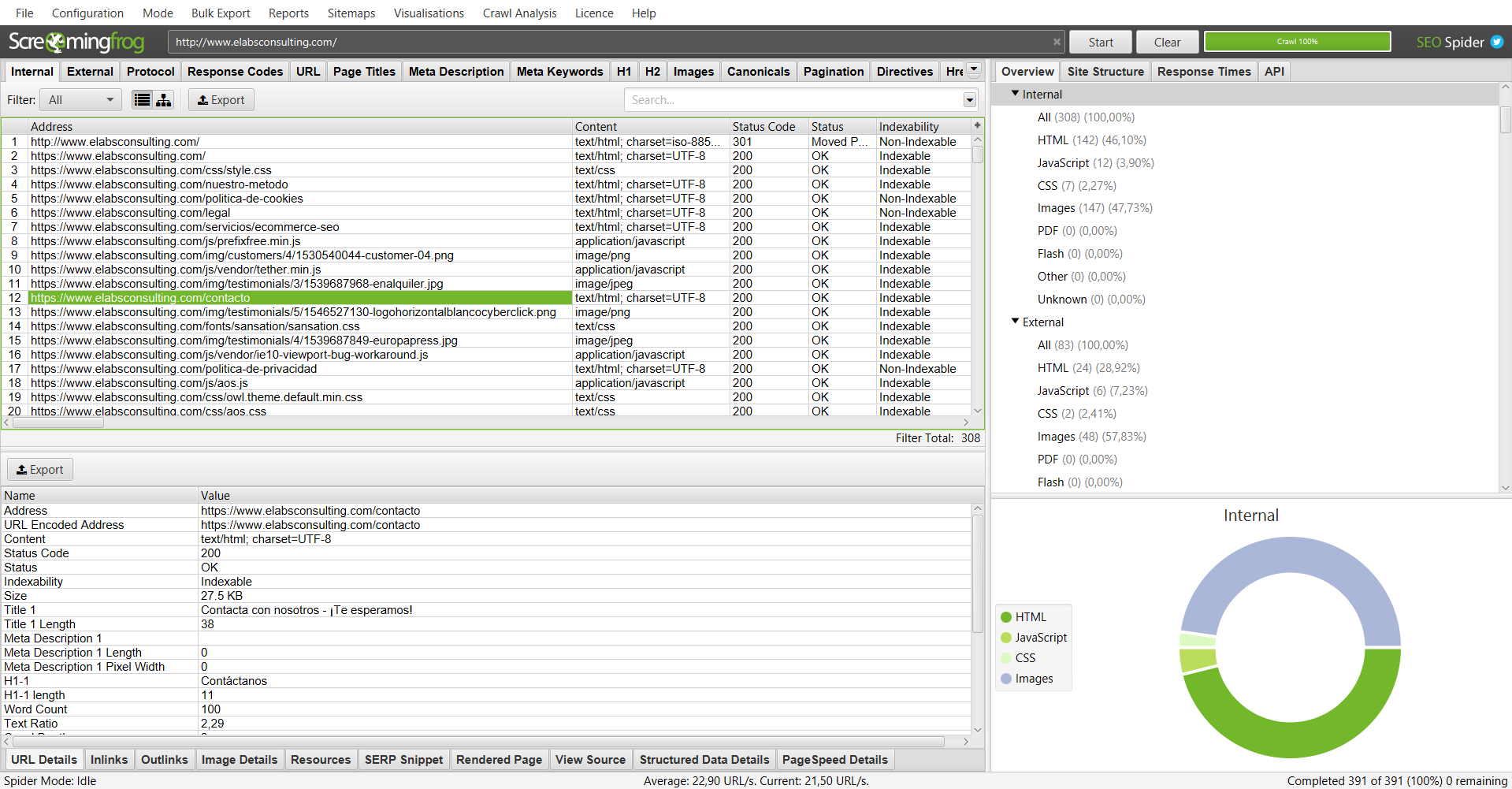

Now that we have seen the different situations in which we can use Screaming Frog, let's look at the interface and the main sections that will be most useful to you:

Section 1: The menu.

In this first section, we will find all the advanced options that Screaming Frog provides. They include features such as crawling with filters and conditions, crawling your sitemap, choosing the crawl mode (starting from a main URL, or analyzing a specific list of URLs), exporting data, and more advanced configuration.

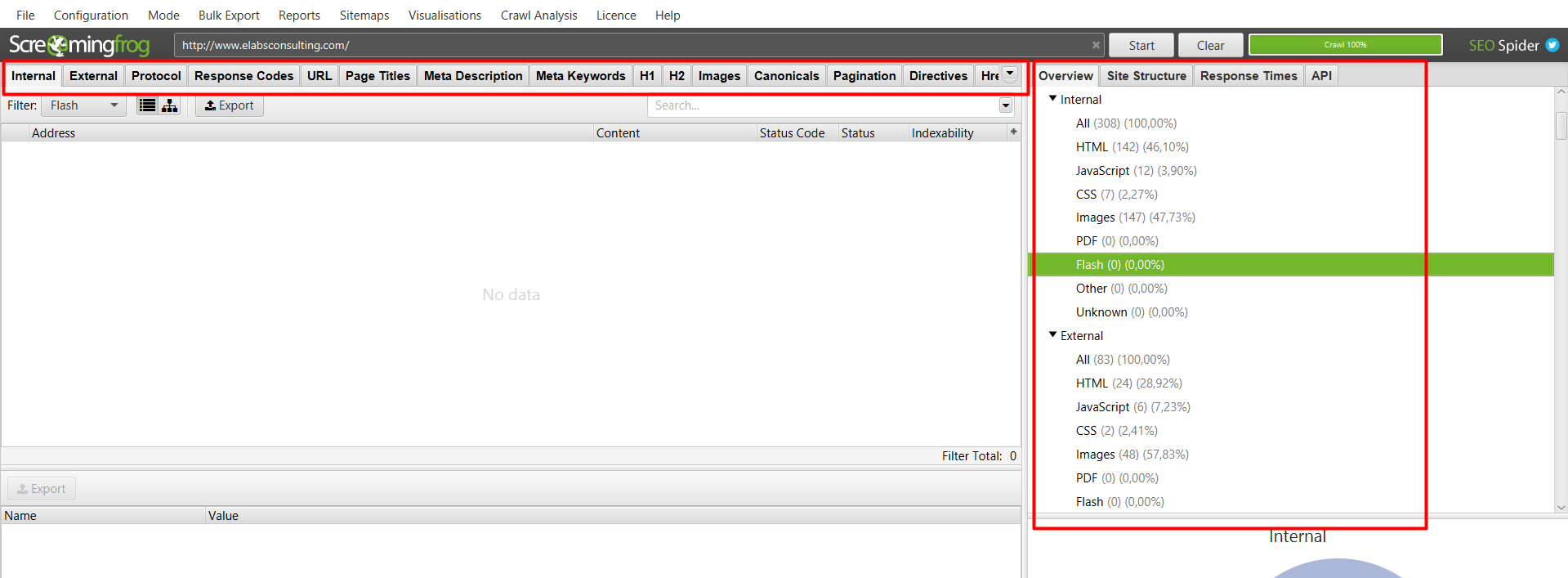

Section 2: Common functionalities (in tabs and expanded)

These two sections are the same; the only difference is how much information is visible at first glance. As we can see, the top area gives us quick access to the more specific sections through tabs that act as shortcuts, while the panel on the right shows all the sections expanded, with their functionalities visible.

Section 3: The battlefield

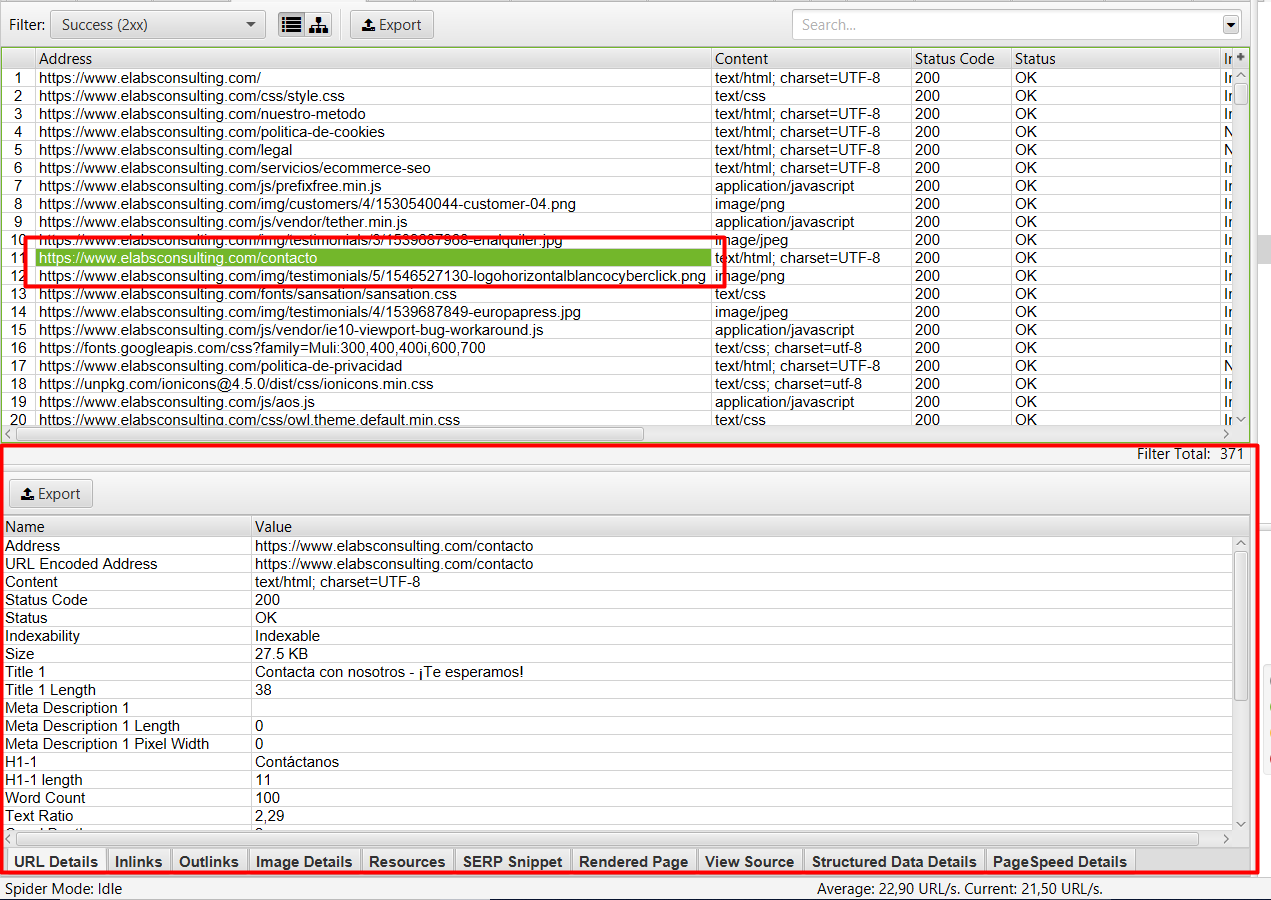

This section is, without a doubt, the most important of all, because it is where we see each URL in detail along with its main characteristics (whether it is indexable or not, its status, how many inlinks it has, what its response time is...).

Even so, if we want to dig deeper into the state of a URL and see it in more detail, the lower panel shows all the data for the selected page more explicitly:

In this lower panel, we see not only the number of inlinks shown in the upper section, but also exactly which internal pages point to the selected URL, which pages it points to, and more specific details of the URL such as the title, meta description, H1, H2, and the length of each of these meta tags...

Now that we have seen the 3 main sections that make up Screaming Frog, let's look at each of them in detail:

Checklist of sections to analyze:

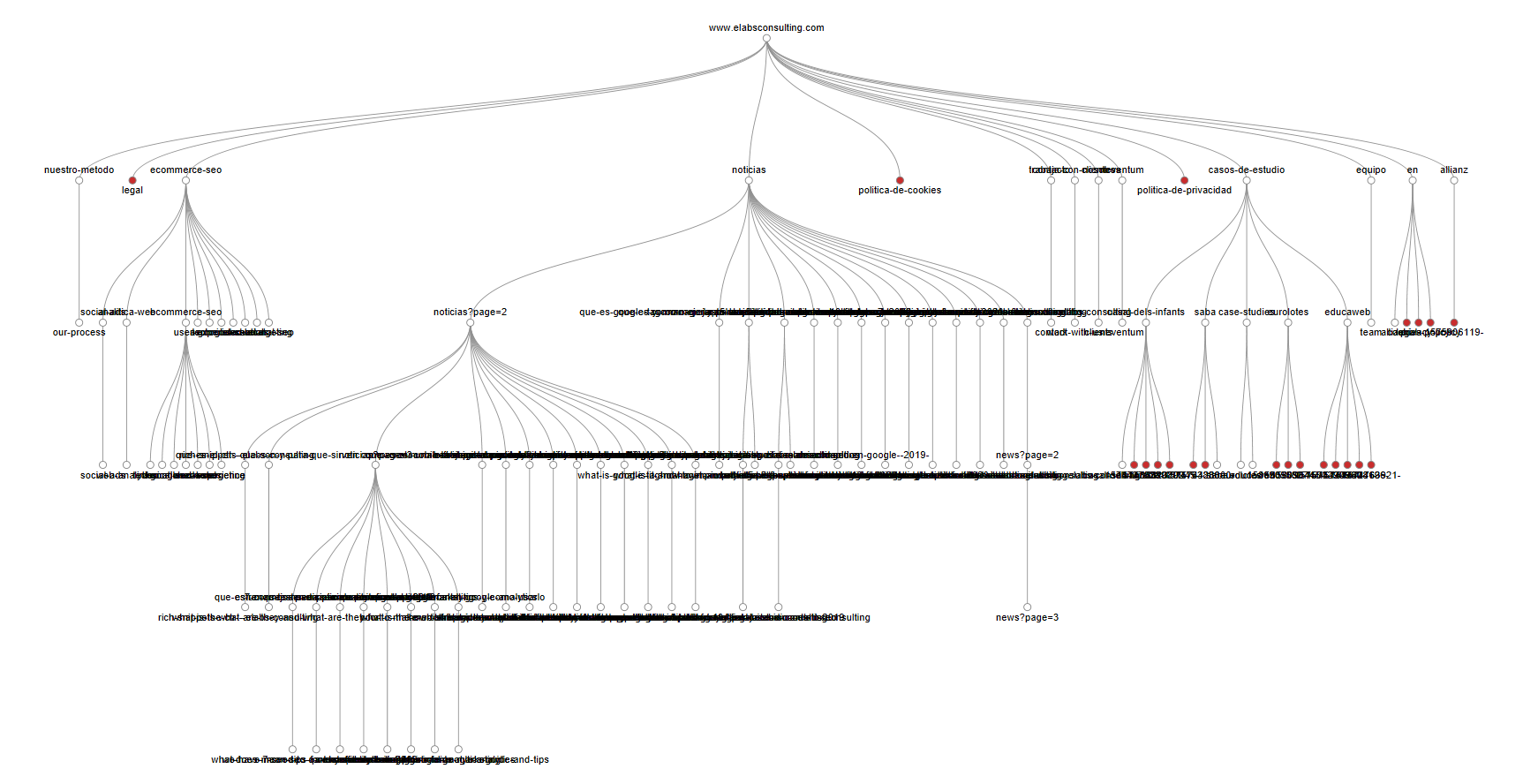

1. Map or web structure:

This functionality is super useful for getting to know a website that is new to us and whose structure we don't know yet. With it, we can clearly see the categories and subcategories, as well as how close to or far from the root domain a page is.

To access this functionality and see the site's tree, we have two options:

- Option 1: Visualizations > Crawl Tree Graph

- Option 2: Visualizations > Force-Directed Crawl Diagram

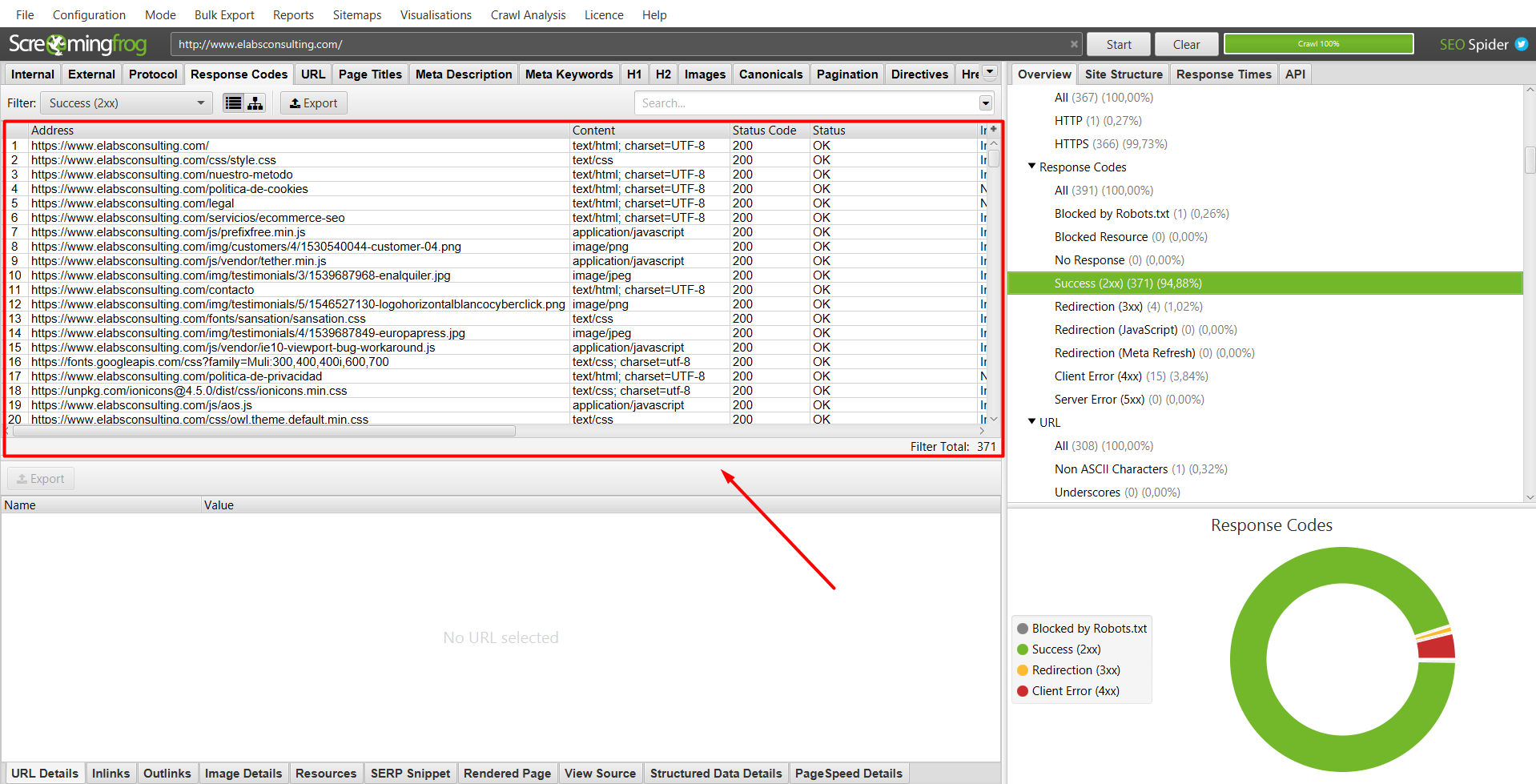

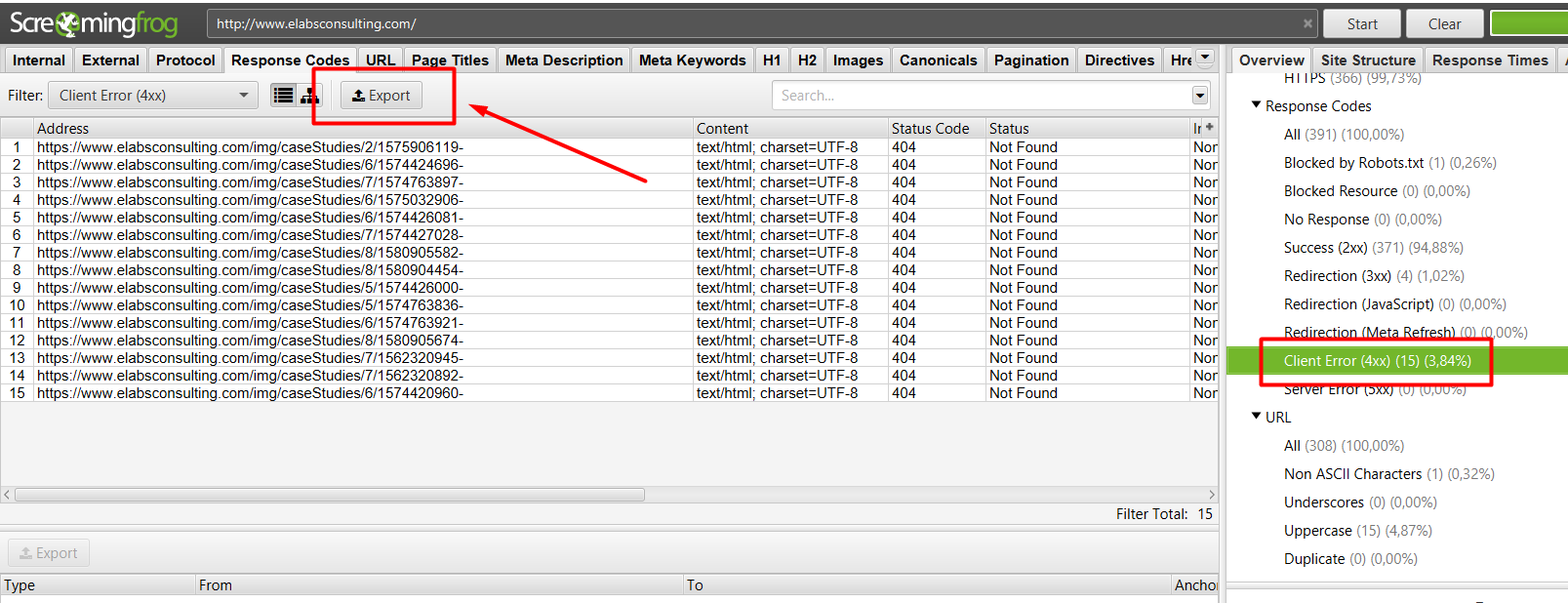
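How “close or far” a page is from the root is usually measured as crawl depth: the minimum number of clicks needed to reach it from the home page. As an illustration (not Screaming Frog's internal code), this Python sketch computes that depth with a breadth-first search over a hypothetical dictionary of internal links.

```python
from collections import deque
from typing import Dict, List


def crawl_depth(links: Dict[str, List[str]], root: str) -> Dict[str, int]:
    """Breadth-first search: minimum number of clicks from root to each reachable page."""
    depth = {root: 0}
    queue = deque([root])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depth:  # first visit in BFS order is the shortest path
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth


# Hypothetical internal-link graph: page -> pages it links to.
links = {
    "/": ["/blog", "/shop"],
    "/blog": ["/blog/post-1"],
    "/shop": ["/shop/shoes"],
    "/shop/shoes": ["/"],
}
print(crawl_depth(links, "/"))
# {'/': 0, '/blog': 1, '/shop': 1, '/blog/post-1': 2, '/shop/shoes': 2}
```

Pages that never appear in the result cannot be reached through internal links from the root, which in this sketch is how orphan pages would show up.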

2. State and Health of the URLs.

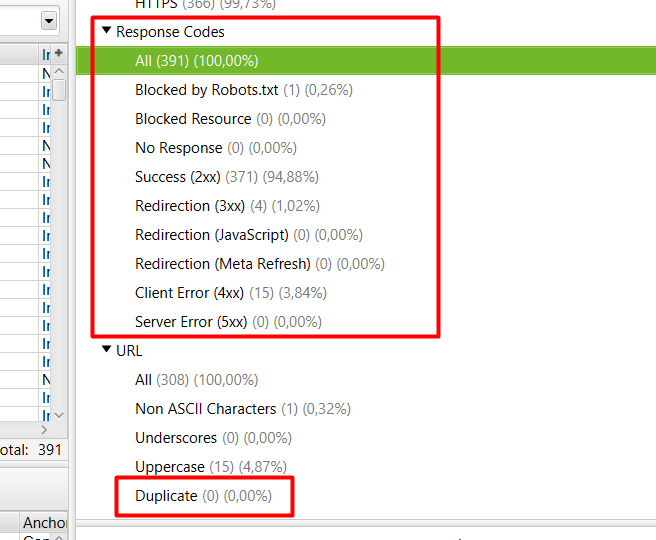

These features are part of Section 2, mentioned earlier. This section is super useful for knowing the state of the site's URLs and which URLs are affected. To use it, go to the “Response Codes” tab and select any of its sub-sections. By clicking on any of the status codes, we will see the affected pages in the main panel.

The filters offered by this section are the following:

- All URLs.

- Blocked by robots.txt.

- Blocked resources.

- Pages that do not respond (No response).

- Pages that respond successfully (2xx).

- 3xx redirects.

- JavaScript redirects.

- Meta refresh redirects.

- 4xx errors.

- 5xx server errors.
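The grouping this tab performs by status code can be sketched in a few lines of Python. This is a simplified illustration, not how Screaming Frog works internally: it only covers the status-code buckets (not the robots.txt, blocked-resource, JavaScript, or meta refresh filters) and assumes a hypothetical dictionary from URL to status code, with None for URLs that did not respond.

```python
from typing import Dict, List, Optional


def bucket_for(status: Optional[int]) -> str:
    """Map an HTTP status code to a response bucket."""
    if status is None:
        return "No response"
    if 200 <= status < 300:
        return "Success (2xx)"
    if 300 <= status < 400:
        return "Redirection (3xx)"
    if 400 <= status < 500:
        return "Client error (4xx)"
    if 500 <= status < 600:
        return "Server error (5xx)"
    return "Other"


def group_by_response(crawl: Dict[str, Optional[int]]) -> Dict[str, List[str]]:
    """Group URLs by response bucket, with each list in sorted URL order."""
    groups: Dict[str, List[str]] = {}
    for url, status in sorted(crawl.items()):
        groups.setdefault(bucket_for(status), []).append(url)
    return groups


crawl = {"/a": 200, "/b": 301, "/c": 404, "/d": 503, "/e": None}
for bucket, urls in group_by_response(crawl).items():
    print(bucket, urls)
```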

Note: the next tab, called “URL”, also has functionality that complements the information on the health and status of the URLs. In particular, that is where we look at duplicate URLs.

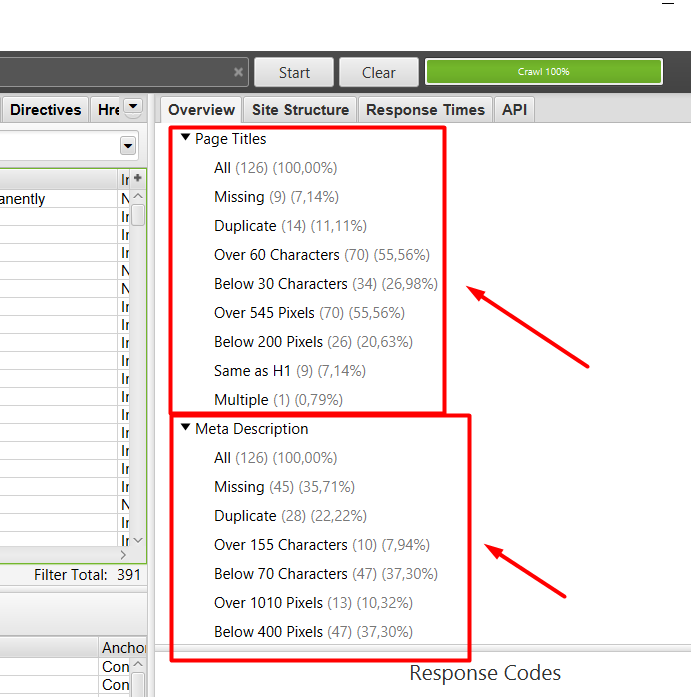

3. Meta tags

The sections that follow refer to the state of the site's meta tags. In these 4 sections, it is important to check how well optimized these meta tags are. The sections to take into account are the following:

- Title

- Meta description

- H1

- H2

In each of these sections, you will find additional filters that point out the following opportunities:

- URLs that are missing the meta tag.

- URLs with a duplicated meta tag.

- URLs where the meta tag exceeds the recommended number of characters.

- URLs with multiple instances of the meta tag.
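The four checks above can be sketched in Python for a single tag type, for example titles. The input format is hypothetical: each URL maps to the list of values found for that tag on the page, so an empty list means “missing” and more than one value means “multiple”. The 60-character limit is an assumed default for titles, exposed as a parameter, and duplicates are compared case-insensitively, which is a choice of this sketch rather than a documented Screaming Frog rule.

```python
from collections import defaultdict
from typing import Dict, List


def audit_tag(pages: Dict[str, List[str]], max_chars: int = 60) -> Dict[str, List[str]]:
    """Flag missing, duplicate, too long, and multiple values for one meta tag type."""
    issues: Dict[str, List[str]] = {"missing": [], "duplicate": [], "too_long": [], "multiple": []}
    owners: Dict[str, List[str]] = defaultdict(list)  # normalized value -> URLs using it
    for url, values in sorted(pages.items()):
        texts = [v.strip() for v in values if v.strip()]  # ignore empty tags
        if not texts:
            issues["missing"].append(url)
            continue
        if len(texts) > 1:
            issues["multiple"].append(url)
        first = texts[0]  # the remaining checks use the first value only
        if len(first) > max_chars:
            issues["too_long"].append(url)
        owners[first.lower()].append(url)
    for urls in owners.values():
        if len(urls) > 1:
            issues["duplicate"].extend(urls)
    issues["duplicate"].sort()
    return issues


pages = {"/a": ["Shoes"], "/b": ["Shoes"], "/c": [], "/d": ["x" * 70], "/e": ["One", "Two"]}
print(audit_tag(pages))
# {'missing': ['/c'], 'duplicate': ['/a', '/b'], 'too_long': ['/d'], 'multiple': ['/e']}
```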

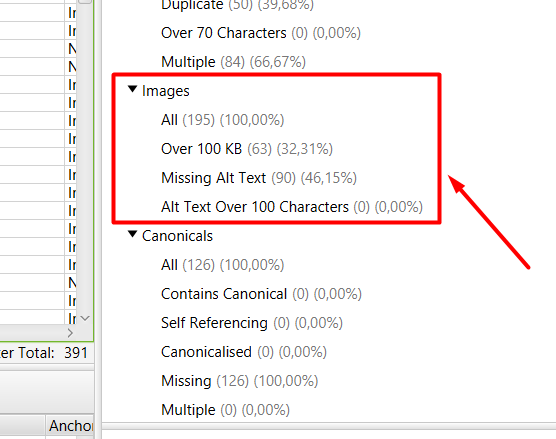

4. Images.

In this section, we are alerted to every possible improvement to the images on our website. To do this, Screaming Frog gives us the following information:

- All the image URLs on the site.

- Images that exceed the recommended file size.

- Images that are missing ALT text.

- Images with ALT text that is too long.
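A rough Python equivalent of these image checks might look like the following. The Image record and both thresholds (100 KB for file size, 100 characters for ALT text) are assumptions for illustration, exposed as parameters. Note that this sketch treats an empty alt attribute as missing, even though decorative images legitimately use alt="".

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Image:
    src: str
    size_bytes: int
    alt: Optional[str]  # None when the alt attribute is absent


def audit_images(images: List[Image], max_kb: int = 100, max_alt_chars: int = 100) -> Dict[str, List[str]]:
    """Flag heavy images and missing or overly long ALT text (assumed thresholds)."""
    report: Dict[str, List[str]] = {"total": [], "too_heavy": [], "missing_alt": [], "alt_too_long": []}
    for img in images:
        report["total"].append(img.src)
        if img.size_bytes > max_kb * 1024:
            report["too_heavy"].append(img.src)
        alt = (img.alt or "").strip()
        if not alt:
            report["missing_alt"].append(img.src)  # also catches alt="" in this sketch
        elif len(alt) > max_alt_chars:
            report["alt_too_long"].append(img.src)
    return report


images = [
    Image("/a.jpg", 50_000, "Red running shoes"),
    Image("/b.jpg", 300_000, None),
    Image("/c.png", 10_000, "x" * 120),
]
print(audit_images(images))
# {'total': ['/a.jpg', '/b.jpg', '/c.png'], 'too_heavy': ['/b.jpg'], 'missing_alt': ['/b.jpg'], 'alt_too_long': ['/c.png']}
```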

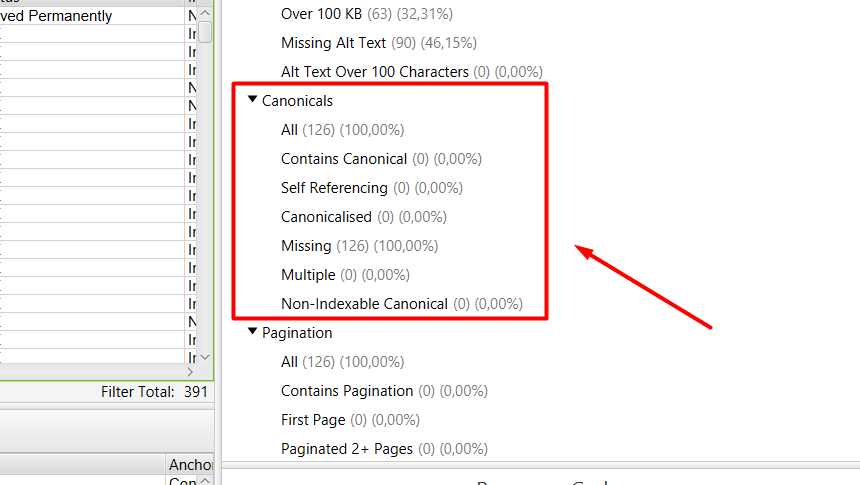

5. Canonicals.

The following section refers to the site's canonical tags. Screaming Frog provides the following information:

- All the URLs of the site.

- URLs that contain a canonical tag.

- URLs with a self-referencing canonical tag.

- Canonicalized URLs (their canonical points to a different URL).

- URLs that are missing a canonical tag.

- URLs with multiple canonical tags.

- URLs whose canonical points to a non-indexable page.
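These categories can be reproduced in a small Python sketch. Both inputs are hypothetical: a dictionary mapping each URL to the canonical hrefs found on it, and a set of URLs known to be indexable. URLs are compared as exact strings (no normalization), and only the first canonical is used to decide whether a page is self-referencing or canonicalized.

```python
from typing import Dict, List, Set


def audit_canonicals(pages: Dict[str, List[str]], indexable: Set[str]) -> Dict[str, List[str]]:
    """Classify URLs by their canonical tags (hypothetical input format)."""
    report: Dict[str, List[str]] = {
        "contains_canonical": [],
        "self_referencing": [],
        "canonicalized": [],
        "missing": [],
        "multiple": [],
        "non_indexable_canonical": [],
    }
    for url, canonicals in sorted(pages.items()):
        if not canonicals:
            report["missing"].append(url)
            continue
        report["contains_canonical"].append(url)
        if len(canonicals) > 1:
            report["multiple"].append(url)
        target = canonicals[0]  # this sketch only evaluates the first canonical
        if target == url:
            report["self_referencing"].append(url)
        else:
            report["canonicalized"].append(url)
        if target not in indexable:
            report["non_indexable_canonical"].append(url)
    return report


pages = {"/a": ["/a"], "/b": ["/a"], "/c": [], "/d": ["/d", "/a"], "/e": ["/gone"]}
indexable = {"/a", "/b", "/d"}
print(audit_canonicals(pages, indexable)["canonicalized"])  # ['/b', '/e']
```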

Now that we have seen the basic functionalities you absolutely need to know to start using Screaming Frog, let's look at the 2 extra functionalities we have prepared, which will help you get the most out of the information for your website analysis:

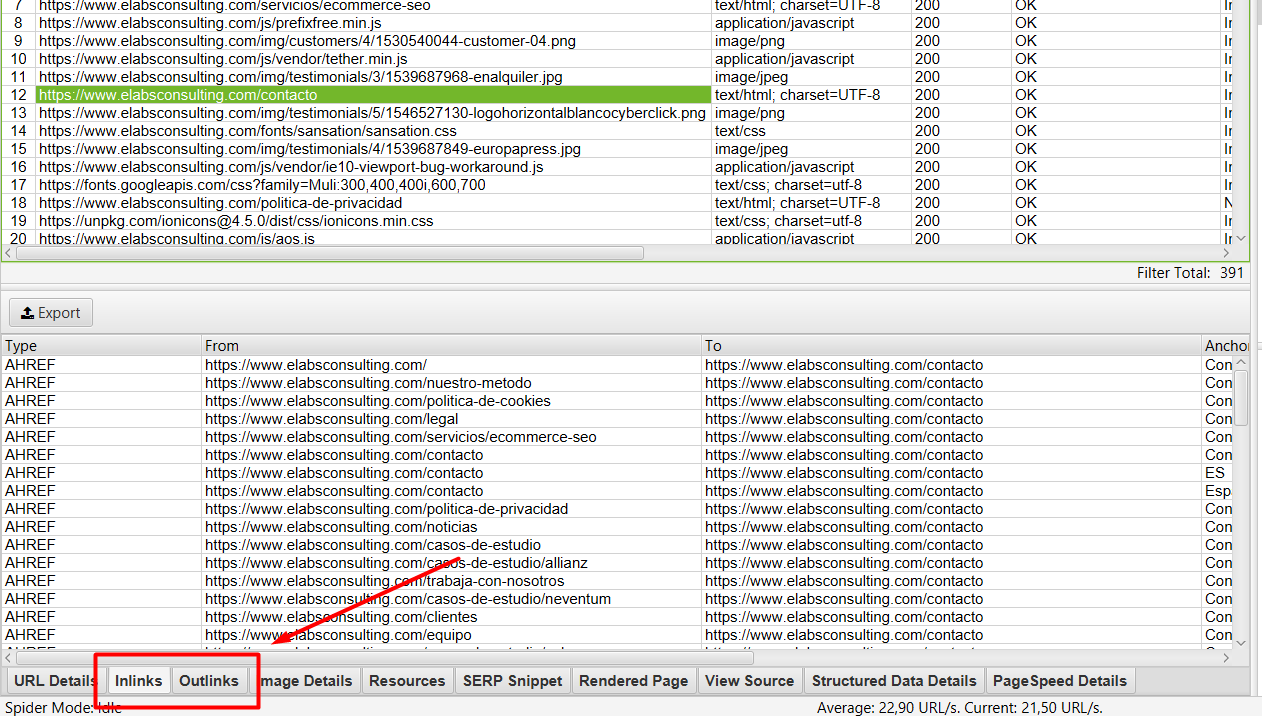

6. Inlinks and Outlinks.

We already mentioned this functionality in Section 3. It is very helpful when we need to know which internal pages link to our URLs, and which internal pages our URLs link to. On top of that, we can also see which anchor texts are used in those links, and whether the links point directly to the URL or first pass through another URL (for example, a redirect).

You will find all of this information in the “Inlinks” and “Outlinks” tabs (although, to see it, we must first click on the URL we want to analyze).
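The relationship between outlinks and inlinks can be illustrated with a short Python sketch: every outlink of one page is an inlink of another. The Link record and the redirects dictionary are hypothetical inputs, and only a single redirect hop is followed; the sketch records which source pages and anchor texts point at each final URL and whether the link went through a redirect.

```python
from collections import defaultdict
from typing import Dict, List, NamedTuple, Tuple


class Link(NamedTuple):
    source: str  # page that contains the link
    target: str  # URL in the href
    anchor: str  # anchor text


def build_inlinks(links: List[Link], redirects: Dict[str, str]) -> Dict[str, List[Tuple[str, str, bool]]]:
    """Map each final URL to (source, anchor, via_redirect) tuples.

    `redirects` maps a redirecting URL to its destination; only one hop is followed.
    """
    inlinks: Dict[str, List[Tuple[str, str, bool]]] = defaultdict(list)
    for link in links:
        final = redirects.get(link.target, link.target)
        inlinks[final].append((link.source, link.anchor, final != link.target))
    return dict(inlinks)


links = [
    Link("/", "/shoes", "Shoes"),
    Link("/blog", "/old-shoes", "our shoes"),
]
redirects = {"/old-shoes": "/shoes"}
print(build_inlinks(links, redirects))
# {'/shoes': [('/', 'Shoes', False), ('/blog', 'our shoes', True)]}
```

The outlinks of a page are simply the links whose source is that page, for example `[l for l in links if l.source == "/blog"]`.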

7. Downloading the data and interpreting the results.

Finally, let's learn how to export all the information we have been collecting, because at the end we will take it to the development and/or content team so they can carry out the improvements and opportunities that Screaming Frog suggests.

So, there are 2 ways to download the data:

Alternative 1:

Select the information you want to download (in this case, we want to download all the URLs with 404 errors) and click the “Export” button:

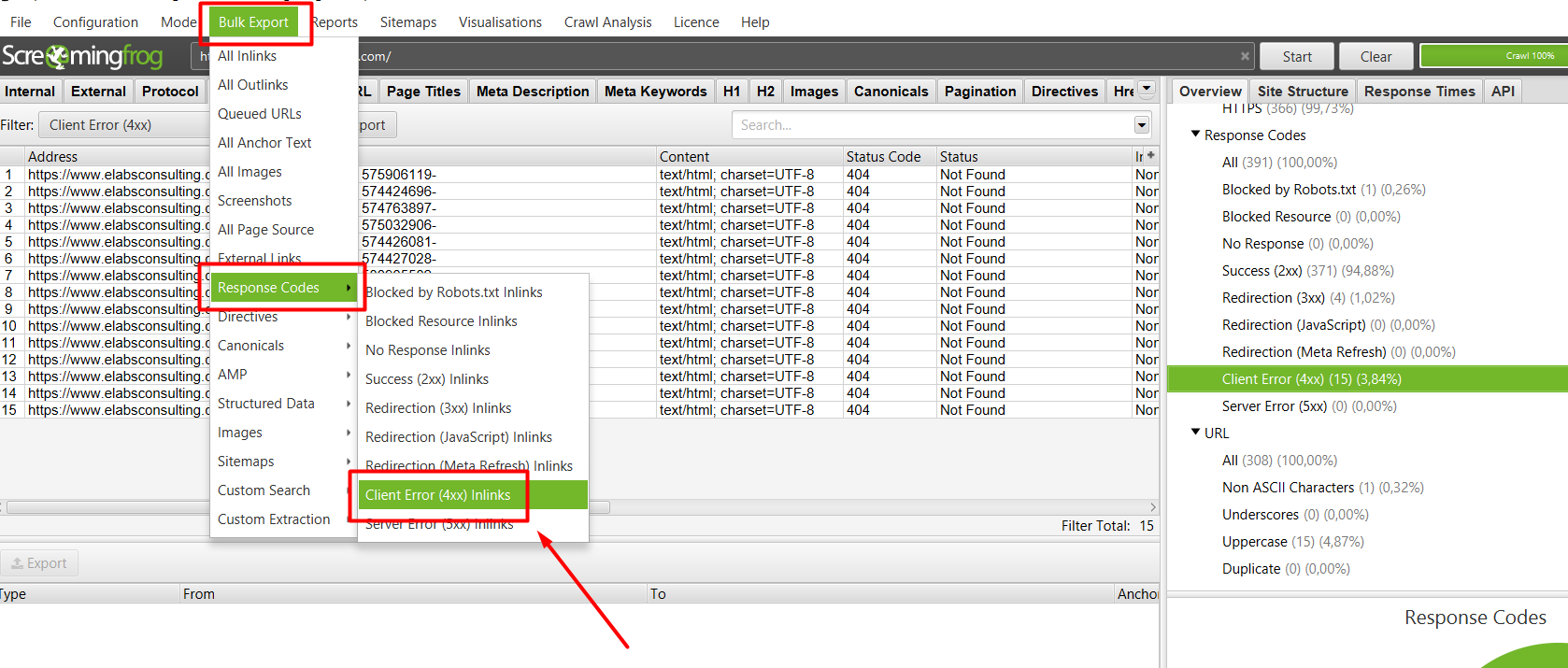
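Once exported, the file can also be filtered outside the tool. The Python sketch below reads a CSV export and keeps the URLs with a given status code. The column names Address and Status Code are assumptions about the export layout, so they are parameters you can change to match your file; the example uses an in-memory sample instead of a real export.

```python
import csv
import io
from typing import List, TextIO


def urls_with_status(
    export: TextIO,
    status: str = "404",
    url_column: str = "Address",  # assumed column name; adjust to your export
    status_column: str = "Status Code",  # assumed column name; adjust to your export
) -> List[str]:
    """Return the URLs whose status column equals `status` in a CSV export."""
    reader = csv.DictReader(export)
    return [row[url_column] for row in reader if (row.get(status_column) or "").strip() == status]


sample = io.StringIO(
    "Address,Status Code\n"
    "https://example.com/,200\n"
    "https://example.com/old-page,404\n"
)
print(urls_with_status(sample))  # ['https://example.com/old-page']
```

With a real file, open it with `open(path, newline="", encoding="utf-8-sig")` (the `utf-8-sig` encoding also strips a byte-order mark if one is present) and pass the file object instead of `sample`, where `path` is whatever name you saved the export under.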

Alternative 2:

With this alternative, there is no need to select the information or URLs to download. All we need to do is export all the data at once... and voilà!